Are you gearing up for a career shift or aiming to ace your next interview? Look no further! We’ve curated a comprehensive guide to help you crack the interview for the coveted Scaler position. From understanding the key responsibilities to mastering the most commonly asked questions, this blog has you covered. So, buckle up and let’s embark on this journey together.

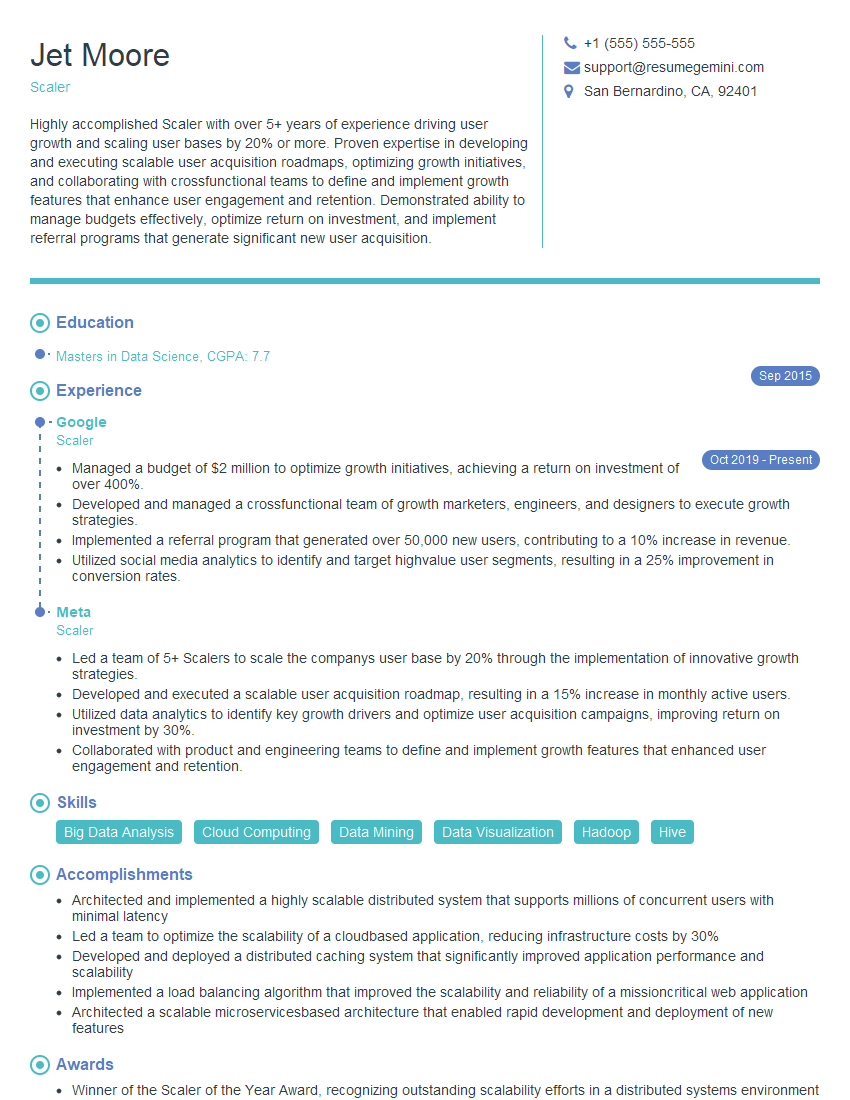

Acing the interview is crucial, but landing one requires a compelling resume that gets you noticed. Crafting a professional document that highlights your skills and experience is the first step toward interview success. ResumeGemini can help you build a standout resume that gets you called in for that dream job.

Essential Interview Questions For Scaler

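1. Explain the concept of data locality in Apache Spark.

Data locality in Apache Spark means scheduling each computation close to the data it operates on. Moving code is far cheaper than moving data, so Spark's task scheduler tries to run each task on a node that already holds that task's input. This reduces network traffic and improves performance.

- Spark achieves data locality through:

- Preferred locations: each partition reports where its data lives. Examples are the HDFS block locations of a file split, or the executor that cached an RDD partition. The scheduler tries to place the task there.

- Locality levels: from best to worst, PROCESS_LOCAL (data in the same executor JVM), NODE_LOCAL (same node), NO_PREF (no location preference), RACK_LOCAL (same rack) and ANY. If no slot is free at the preferred level, the scheduler waits for a configurable time (`spark.locality.wait`, 3s by default) before falling back to a less local level.

- Benefits of data locality:

- Reduced network traffic and latency

- Improved performance and scalability

- Lower cost through better resource utilization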
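Locality levels are easier to grasp with a concrete model. The sketch below is plain Python, not Spark code. The `Executor` and `Partition` classes and the `locality_level` and `best_executor` helpers are hypothetical names. The model ranks each executor by the best locality level it could offer for one partition: PROCESS_LOCAL (cached in that executor), NODE_LOCAL (same host), NO_PREF, RACK_LOCAL (same rack) or ANY. It then picks the best one. It ignores the `spark.locality.wait` delay that real Spark applies before accepting a worse level.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

# Spark's locality levels, best first (mirrors the TaskLocality ordering).
LEVELS = ["PROCESS_LOCAL", "NODE_LOCAL", "NO_PREF", "RACK_LOCAL", "ANY"]

@dataclass
class Executor:  # hypothetical toy type
    executor_id: str
    host: str
    rack: str

@dataclass
class Partition:  # hypothetical toy type
    preferred_hosts: List[str] = field(default_factory=list)  # e.g. HDFS block locations
    cached_on: Optional[str] = None  # id of an executor holding a cached copy, if any

def locality_level(ex: Executor, part: Partition, rack_of: Dict[str, str]) -> str:
    if part.cached_on == ex.executor_id:
        return "PROCESS_LOCAL"  # data already in this executor's memory
    if ex.host in part.preferred_hosts:
        return "NODE_LOCAL"  # data on the same machine
    if not part.preferred_hosts and part.cached_on is None:
        return "NO_PREF"  # source has no location preference
    if any(rack_of.get(h) == ex.rack for h in part.preferred_hosts):
        return "RACK_LOCAL"  # data on another machine in the same rack
    return "ANY"

def best_executor(executors: List[Executor], part: Partition,
                  rack_of: Dict[str, str]) -> Executor:
    # Pick the executor offering the most local level (no delay scheduling here).
    return min(executors, key=lambda ex: LEVELS.index(locality_level(ex, part, rack_of)))

rack_of = {"h1": "r1", "h2": "r1", "h3": "r2"}
executors = [Executor("e1", "h1", "r1"), Executor("e2", "h2", "r1"), Executor("e3", "h3", "r2")]
block = Partition(preferred_hosts=["h2"])
chosen = best_executor(executors, block, rack_of)  # e2, the executor on the block's host
```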

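2. Describe the different types of transformations and actions in Apache Spark.

Transformations

- All transformations are lazy. Calling map, filter, select or join only records a step in the plan (the lineage). Nothing is computed until an action runs.

- Transformations are classified as narrow or wide. Narrow ones (e.g., map, filter) need no data movement between partitions. Wide ones (e.g., groupByKey, reduceByKey, joins) trigger a shuffle.

Actions

Actions trigger execution of the plan and either return a result to the driver or write output. Common examples:

- Collect: Returns all elements of the Dataset to the driver as an array (use with care on large data)

- Count: Returns the number of elements in the Dataset

- Show: Prints the first rows of a DataFrame (20 by default) to the console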
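Lazy evaluation separates "describing" a computation from "running" it. The toy class below is plain Python, not PySpark. `LazyDataset` and its methods are hypothetical names. Its transformations (`map`, `filter`) only append to an immutable plan and return a new object. Its actions (`collect`, `count`) execute that plan.

```python
class LazyDataset:
    """Toy model: transformations only record steps; actions execute them."""

    def __init__(self, source, steps=()):
        self._source = source
        self._steps = tuple(steps)  # the recorded plan; never mutated in place

    def map(self, fn):
        # Transformation: returns a NEW dataset with one more step, computes nothing.
        return LazyDataset(self._source, self._steps + (("map", fn),))

    def filter(self, pred):
        return LazyDataset(self._source, self._steps + (("filter", pred),))

    def collect(self):
        # Action: run every recorded step over the source and return the results.
        results = []
        for item in self._source:
            keep = True
            for kind, fn in self._steps:
                if kind == "map":
                    item = fn(item)
                elif not fn(item):
                    keep = False
                    break
            if keep:
                results.append(item)
        return results

    def count(self):
        # Another action; without caching, the whole plan re-runs from the source.
        return len(self.collect())


calls = []

def square(x):
    calls.append(x)  # record when work actually happens
    return x * x

evens = LazyDataset(range(5)).map(square).filter(lambda v: v % 2 == 0)
calls_before_action = len(calls)  # 0: defining the plan ran nothing
result = evens.collect()          # [0, 4, 16]
```

Calling `count()` afterwards squares every element again. This mirrors why Spark users call `cache()` or `persist()` on data that several actions reuse.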

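3. How does Apache Spark handle data partitioning?

Apache Spark splits data into partitions, which are its unit of parallelism: each task processes one partition. The number of partitions depends on:

- The input source: for files, roughly one partition per file split (an HDFS block, or up to `spark.sql.files.maxPartitionBytes`, 128 MB by default, for DataFrame reads)

- The cluster's parallelism: `spark.default.parallelism` defaults, in most cluster modes, to the total number of executor cores (or 2, whichever is larger)

- Explicit settings: `repartition()` and `coalesce()` calls, and `spark.sql.shuffle.partitions` (200 by default) for DataFrame shuffles

When data is shuffled by key, Spark uses a hash partitioner by default, which sends a key to partition hash(key) mod numPartitions. Sorting uses range partitioning, and custom partitioners can be defined for key-value RDDs.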
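Hash partitioning guarantees that every record with the same key lands in the same partition. The sketch below shows that property in plain Python, assuming a rule like Spark's HashPartitioner (a non-negative hash(key) mod numPartitions). `hash_partition` and `partition_by_key` are hypothetical helper names. Python's `hash()` stands in for the JVM `hashCode`, so the actual partition numbers will differ from Spark's.

```python
def hash_partition(key, num_partitions):
    # Python's % is non-negative for a positive divisor, like Spark's nonNegativeMod.
    return hash(key) % num_partitions

def partition_by_key(records, num_partitions):
    """Distribute (key, value) records so equal keys share a partition."""
    partitions = [[] for _ in range(num_partitions)]
    for key, value in records:
        partitions[hash_partition(key, num_partitions)].append((key, value))
    return partitions

records = [(1, "a"), (2, "b"), (5, "c"), (1, "d")]
parts = partition_by_key(records, 4)  # small ints hash to themselves in CPython
```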

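4. Explain the difference between an Apache Spark RDD and a DataFrame.

- RDD:

- A Resilient Distributed Dataset (RDD) is a low-level distributed collection of arbitrary data elements, with no schema

- RDDs are immutable and lazily evaluated. Spark cannot see inside the functions passed to them, so it cannot optimize those operations

- DataFrame:

- A DataFrame is a distributed collection of data organized into named columns with a schema, like a table (in Scala and Java, a DataFrame is a Dataset[Row])

- DataFrames are also immutable and lazily evaluated. Their queries are optimized by the Catalyst optimizer, which usually makes them faster than equivalent hand-written RDD code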

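5. How does Apache Spark handle fault tolerance?

Apache Spark recovers from failures using lineage: each RDD records the chain of transformations that produced it from its source data. DataFrames are executed as RDDs, so they get the same protection.

- If an executor fails and some partitions are lost, Spark uses the lineage to recompute only the lost partitions, instead of reprocessing the entire dataset. Failed tasks are also retried, up to `spark.task.maxFailures` (4 by default).

- For long lineage chains, where recomputation would be expensive, Spark supports checkpointing. Calling `checkpoint()` writes the data to reliable storage such as HDFS and truncates the lineage, so recovery reads the checkpoint instead of replaying every step. Structured Streaming checkpoints its progress and state periodically.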
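Lineage-based recovery rebuilds a lost partition by replaying its recorded transformations from the source. The toy class below illustrates this in plain Python; it does not use Spark's API. `LineageRDD`, `lose_partition` and `recomputed` are hypothetical names. The model shows that losing one partition triggers recomputation of only that partition.

```python
class LineageRDD:
    """Toy RDD that can rebuild any lost partition by replaying its lineage."""

    def __init__(self, source_partitions, steps=()):
        self.source = source_partitions  # reliable input, e.g. file splits on HDFS
        self.steps = tuple(steps)        # lineage: the transformations applied so far
        self.materialized = {}           # partition index -> computed data
        self.recomputed = []             # indices rebuilt after a failure

    def map(self, fn):
        return LineageRDD(self.source, self.steps + (fn,))

    def _compute(self, i):
        # Replay the lineage for partition i only, starting from the source.
        data = list(self.source[i])
        for fn in self.steps:
            data = [fn(x) for x in data]
        return data

    def materialize(self):
        for i in range(len(self.source)):
            self.materialized[i] = self._compute(i)

    def lose_partition(self, i):
        # Simulate the executor holding partition i crashing.
        self.materialized.pop(i, None)

    def get(self, i):
        if i not in self.materialized:
            self.recomputed.append(i)
            self.materialized[i] = self._compute(i)
        return self.materialized[i]


rdd = LineageRDD([[1, 2], [3, 4], [5, 6]]).map(lambda x: x * 10).map(lambda x: x + 1)
rdd.materialize()
rdd.lose_partition(1)  # partitions 0 and 2 survive; only 1 must be rebuilt
```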

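6. Describe the roles of the driver and executors in Apache Spark.

- Driver: The driver program runs the application's main function and creates a SparkContext (directly or through a SparkSession), which is the entry point to Apache Spark. It turns the program into a DAG of stages, splits each stage into tasks (one per partition) and assigns them to executors for parallel execution.

- Executors: Executors are processes that run on worker nodes for the lifetime of the application. They execute tasks, cache data in memory or on disk, and send results and status back to the driver.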
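The driver/executor split can be pictured as "one coordinator, many workers, one task per partition." The sketch below models that with Python's `concurrent.futures` thread pool standing in for executors. It is an analogy, not how Spark runs. `split_into_partitions`, `task` and `run_job` are hypothetical names, and the job is simply a sum of squares.

```python
from concurrent.futures import ThreadPoolExecutor

def split_into_partitions(data, num_partitions):
    # Driver side: divide the input into roughly equal partitions.
    size, extra = divmod(len(data), num_partitions)
    partitions, start = [], 0
    for i in range(num_partitions):
        end = start + size + (1 if i < extra else 0)
        partitions.append(data[start:end])
        start = end
    return partitions

def task(partition):
    # Executor side: each task processes exactly one partition.
    return sum(x * x for x in partition)

def run_job(data, num_partitions=4, num_executors=2):
    partitions = split_into_partitions(data, num_partitions)
    with ThreadPoolExecutor(max_workers=num_executors) as pool:
        partial_results = list(pool.map(task, partitions))  # one task per partition
    return sum(partial_results)  # driver combines the partial results

total = run_job(list(range(10)))
```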

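7. Explain how Apache Spark optimizes query execution.

- Catalyst optimizer: Spark SQL turns DataFrame and SQL queries into a logical plan and applies mostly rule-based optimizations before choosing a physical plan. Examples are predicate pushdown, column pruning and constant folding. An optional cost-based optimizer (`spark.sql.cbo.enabled`, off by default) uses table and column statistics to improve decisions such as join order.

- Adaptive query execution (AQE): Enabled by default since Spark 3.2, AQE re-optimizes the plan at runtime using statistics from completed shuffle stages. For example, it can coalesce small shuffle partitions, switch a sort-merge join to a broadcast join, or split skewed partitions.

- Join optimization: Spark supports several join strategies (e.g., broadcast hash join, shuffle hash join, sort-merge join) and chooses one based on data size and join type. A table smaller than `spark.sql.autoBroadcastJoinThreshold` (10 MB by default) can be broadcast to every executor, which avoids shuffling the larger table.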
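A broadcast hash join works by shipping the small table everywhere and building a hash table from it, so the large table never moves. The sketch below models both the size-based choice and the join itself in plain Python. It assumes a simplified rule (broadcast if the smaller side is at most 10 MB, mirroring the default of `spark.sql.autoBroadcastJoinThreshold`). Spark's real planner also weighs join type and hints. `choose_join_strategy` and `broadcast_hash_join` are hypothetical names.

```python
from collections import defaultdict

MB = 1024 * 1024
AUTO_BROADCAST_THRESHOLD = 10 * MB  # mirrors the spark.sql.autoBroadcastJoinThreshold default

def choose_join_strategy(left_bytes, right_bytes, threshold=AUTO_BROADCAST_THRESHOLD):
    # Simplified rule: broadcast the smaller side if it fits under the threshold.
    if min(left_bytes, right_bytes) <= threshold:
        return "broadcast_hash_join"
    return "sort_merge_join"

def broadcast_hash_join(large, small):
    # Build a hash table from the small side (the part that would be broadcast)...
    table = defaultdict(list)
    for key, value in small:
        table[key].append(value)
    # ...then stream the large side through it; the large side is never shuffled.
    return [(key, lv, sv) for key, lv in large for sv in table.get(key, [])]

large = [(1, "a"), (2, "b"), (3, "c")]
small = [(1, "x"), (3, "y"), (3, "z")]
joined = broadcast_hash_join(large, small)
```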

8. What are the different types of join operations supported by Apache Spark?

- Inner join: Returns rows that have matching values in both tables.

- Left outer join: Returns all rows from the left table, even if there are no matching values in the right table.

- Right outer join: Returns all rows from the right table, even if there are no matching values in the left table.

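- Full outer join: Returns all rows from both tables, regardless of whether there are matching values.

- Left semi join: Returns the rows from the left table that have a match in the right table, keeping only the left table's columns.

- Left anti join: Returns the rows from the left table that have no match in the right table.

- Cross join: Returns the Cartesian product of both tables.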
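The join types differ only in what happens to unmatched rows. The plain-Python function below makes that concrete for equi-joins on (key, value) pairs. `join` is a hypothetical helper, not Spark's `DataFrame.join`, though the `how` names loosely follow Spark's strings. `None` plays the role of SQL NULL for the side with no match. Cross join is omitted because it has no join key.

```python
SUPPORTED = {"inner", "left", "right", "full", "left_semi", "left_anti"}

def join(left, right, how="inner"):
    """Toy equi-join on (key, value) pairs; None fills the side with no match."""
    if how not in SUPPORTED:
        raise ValueError(f"unsupported join type: {how}")
    if how == "right":
        # A right join is a left join with the sides swapped (columns reordered back).
        return [(k, lv, rv) for k, rv, lv in join(right, left, "left")]
    right_by_key = {}
    for k, v in right:
        right_by_key.setdefault(k, []).append(v)
    out = []
    for k, lv in left:
        matches = right_by_key.get(k, [])
        if how in ("inner", "left", "full"):
            out.extend((k, lv, rv) for rv in matches)
            if not matches and how in ("left", "full"):
                out.append((k, lv, None))  # unmatched left row is kept
        elif how == "left_semi" and matches:
            out.append((k, lv))  # left columns only, once per matching left row
        elif how == "left_anti" and not matches:
            out.append((k, lv))
    if how == "full":
        left_keys = {k for k, _ in left}
        out.extend((k, None, rv) for k, rv in right if k not in left_keys)
    return out

left = [(1, "a"), (2, "b")]
right = [(2, "x"), (3, "y")]
```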

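9. How does Apache Spark handle data skew?

Data skew occurs when data is unevenly distributed across partitions, typically because a few keys are far more frequent than the rest. As a result, a handful of tasks run much longer than the others. Common ways to handle skew in Apache Spark:

- Salting: Adding a random suffix (the "salt") to hot keys so their records spread across several partitions. Spark aggregates per salted key, then aggregates again after removing the salt. For joins, the other side is replicated once per salt value.

- Adaptive query execution: With AQE enabled, Spark can detect skewed partitions in sort-merge joins at runtime and split them into smaller tasks (`spark.sql.adaptive.skewJoin.enabled`).

- Broadcast joins: If one side of a skewed join is small, broadcasting it avoids shuffling the skewed side at all.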
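Salting turns one huge group into several smaller ones and then recombines them. The sketch below shows the two-stage aggregation pattern in plain Python, not PySpark. `salted_count` and its `num_salts` and `seed` parameters are hypothetical. In Spark, each stage would be a separate groupBy over the salted and then the unsalted key.

```python
import random
from collections import Counter

def salted_count(keys, num_salts=8, seed=0):
    rng = random.Random(seed)
    # Stage 1: count per (key, salt), so a hot key's records spread over num_salts groups.
    stage1 = Counter((k, rng.randrange(num_salts)) for k in keys)
    # Stage 2: drop the salt and combine the partial counts per original key.
    final = Counter()
    for (k, _salt), c in stage1.items():
        final[k] += c
    return stage1, final

keys = ["hot"] * 1000 + ["cold"] * 10
stage1, final = salted_count(keys)
```

The final counts match an unsalted count. However, no single stage-1 group has to process all 1,000 "hot" records.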

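10. Explain the concept of wide transformations and narrow transformations in Apache Spark.

- Narrow transformations: Each output partition depends on at most one input partition (e.g., map, filter, flatMap, union). Spark pipelines consecutive narrow transformations within a single stage, without moving data between executors.

- Wide transformations: An output partition depends on data from many input partitions (e.g., groupByKey, reduceByKey, distinct, repartition and most joins), so records must be redistributed by key across the cluster.

- Wide transformations trigger a shuffle, which writes data to disk and sends it over the network, so it can be expensive. Each shuffle also marks a stage boundary. Narrow transformations do not shuffle.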
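The narrow/wide distinction is about partition dependencies, not about the number of columns. In a narrow transformation, output partition i comes from input partition i alone. In a wide one, an output partition can gather records from every input partition. The plain-Python sketch below tracks those dependencies explicitly. `narrow_map` and `wide_group_by_key` are hypothetical names, and Python's `hash()` stands in for Spark's partitioner.

```python
def narrow_map(partitions, fn):
    # Output partition i is computed from input partition i alone: no shuffle.
    return [[fn(x) for x in part] for part in partitions]

def wide_group_by_key(partitions, num_out):
    """Shuffle (key, value) records so all values for a key land in one output partition."""
    out = [dict() for _ in range(num_out)]
    deps = [set() for _ in range(num_out)]  # which input partitions fed each output partition
    for src, part in enumerate(partitions):
        for key, value in part:
            dst = hash(key) % num_out
            out[dst].setdefault(key, []).append(value)
            deps[dst].add(src)
    return out, deps

pairs = [[(1, "a"), (2, "b")], [(1, "c"), (3, "d")]]
grouped, deps = wide_group_by_key(pairs, 2)
```

Output partition 1 depends on both input partitions, because key 1 appears in each. That cross-partition dependency is exactly what forces a shuffle.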

Interviewers often ask about specific skills and experiences. With ResumeGemini’s customizable templates, you can tailor your resume to showcase the skills most relevant to the position, making a powerful first impression. Also check out the Resume Template specially tailored for Scaler.

Career Expert Tips:

- Ace those interviews! Prepare effectively by reviewing the Top 50 Most Common Interview Questions on ResumeGemini.

- Navigate your job search with confidence! Explore a wide range of Career Tips on ResumeGemini. Learn about common challenges and recommendations to overcome them.

- Craft the perfect resume! Master the Art of Resume Writing with ResumeGemini’s guide. Showcase your unique qualifications and achievements effectively.


Researching the company and tailoring your answers is essential. Once you have a clear understanding of the Scaler role’s requirements, you can use ResumeGemini to adjust your resume to match the job description.

Key Job Responsibilities

As a Scaler, you’ll play a pivotal role in driving business growth and expanding the company’s customer base.

1. New Business Development

You’ll be responsible for identifying, qualifying, and pursuing prospective clients that align with the company’s target market.

- Generate qualified leads through market research and targeted outreach

- Conduct thorough needs analysis to understand client requirements and pain points

2. Sales Execution

You’ll devise and execute effective sales strategies to convert leads into customers.

- Develop persuasive sales presentations and proposals that highlight product value and address client needs

- Negotiate contracts and close deals, ensuring compliance with company policies and legal requirements

3. Relationship Management

You’ll foster strong relationships with existing and new clients to ensure their satisfaction and drive repeat business.

- Maintain regular communication with clients to provide updates, address concerns, and up-sell additional products and services

- Identify and address client issues promptly and effectively, going above and beyond to resolve any challenges

4. Market Analysis and Research

You’ll stay abreast of industry trends and conduct market analysis to identify opportunities and develop tailored sales strategies.

- Monitor market trends, competitor activity, and customer feedback to gain insights and identify growth opportunities

- Conduct market research to understand target audience demographics, psychographics, and buying behavior

Interview Tips

To ace your Scaler interview, consider these preparation tips and strategies:

1. Research the Company and Industry

Demonstrate your interest and knowledge of the company’s products, services, and industry by conducting thorough research beforehand.

- Visit the company’s website, read industry news and articles, and check social media platforms

- Identify key industry trends and challenges, and prepare to discuss how you can leverage this knowledge in your role

2. Highlight Your Sales Skills and Experience

Emphasize your proven sales abilities and quantify your accomplishments with specific examples.

- Describe successful sales strategies you’ve implemented, highlighting your ability to identify customer needs and develop customized solutions

- Provide quantifiable results of your sales performance, such as increased revenue, closed deals, or market share growth

3. Demonstrate Your Business Acumen

Showcase your understanding of business principles and your ability to drive growth.

- Discuss your experience in market analysis, competitor benchmarking, and developing sales forecasts

- Explain how your strategies have contributed to overall business objectives, such as increased revenue, improved market share, or enhanced customer satisfaction

4. Practice Your Presentation Skills

Prepare a concise and engaging presentation that highlights your key skills and experiences.

- Use data, case studies, and examples to support your claims and demonstrate your value

- Practice delivering your presentation confidently and professionally, ensuring it’s within the allotted time limit

5. Prepare Thoughtful Questions

Asking insightful questions shows you’re engaged and interested in the role and company.

- Prepare questions that demonstrate your understanding of the industry and the company’s business strategy

- Ask about the company’s growth plans, market opportunities, and the specific contributions you can make

Next Step:

Now that you’re armed with interview-winning answers and a deeper understanding of the Scaler role, it’s time to take action! Does your resume accurately reflect your skills and experience for this position? If not, head over to ResumeGemini. Here, you’ll find all the tools and tips to craft a resume that gets noticed. Don’t let a weak resume hold you back from landing your dream job. Polish your resume, hit the “Build Your Resume” button, and watch your career take off! Remember, preparation is key, and ResumeGemini is your partner in interview success.