Are you gearing up for an interview for a Scrape Gatherer position? Whether you’re a seasoned professional or just stepping into the role, understanding what’s expected can make all the difference. In this blog post, we dive deep into the essential interview questions for a Scrape Gatherer role and break down the key responsibilities of the job. By exploring these insights, you’ll gain a clearer picture of what employers are looking for and how you can stand out. Read on to equip yourself with the knowledge and confidence needed to ace your next interview and land your dream job!

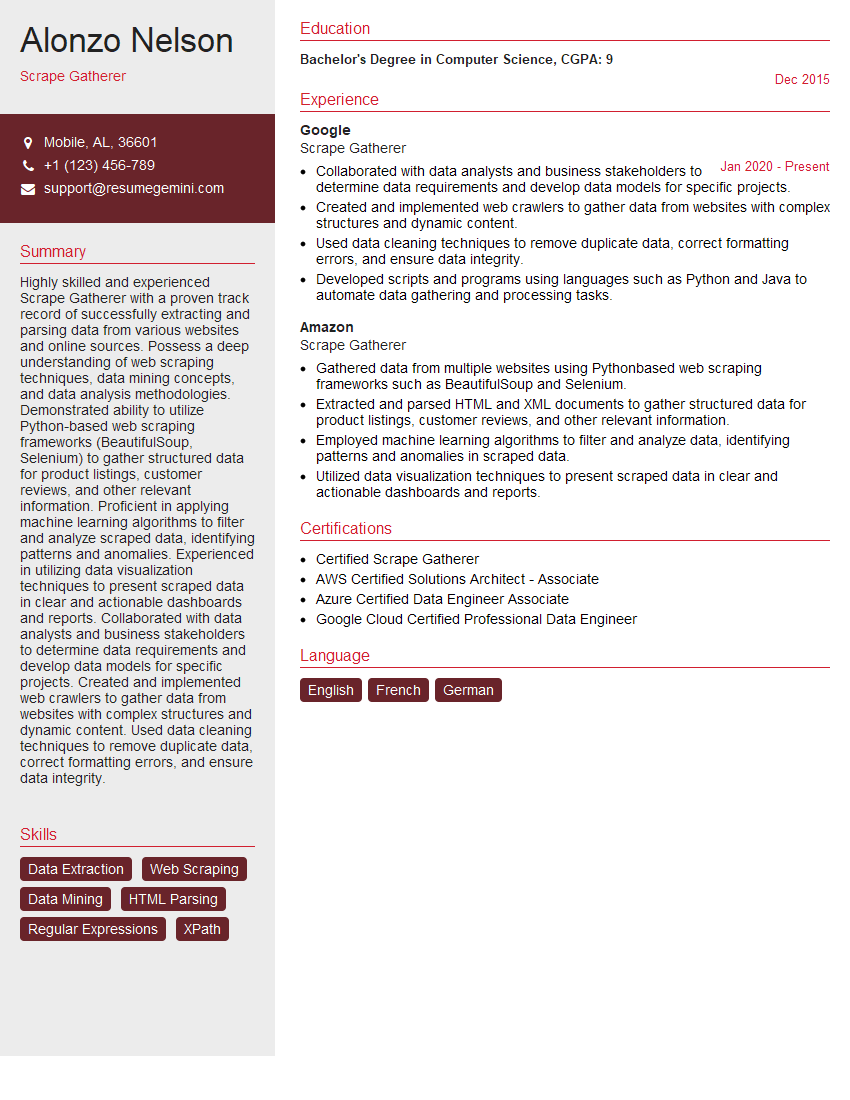

Acing the interview is crucial, but landing one requires a compelling resume that gets you noticed. Crafting a professional document that highlights your skills and experience is the first step toward interview success. ResumeGemini can help you build a standout resume that gets you called in for that dream job.

Essential Interview Questions For Scrape Gatherer

1. How would you approach scraping data from a website that uses AJAX to load content?

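To scrape data from a website that uses AJAX, I would use a browser automation tool such as Puppeteer or Selenium, typically running the browser in headless mode. These tools drive a real browser engine that executes the page’s JavaScript, so the AJAX requests fire just as they would for a human visitor. I can then wait until the dynamically loaded content appears, interact with the page if needed (clicking, scrolling), and extract the data from the rendered DOM.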
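Whatever tool drives the browser, extraction has to wait until the AJAX content has actually arrived. Selenium provides `WebDriverWait` and Puppeteer provides `page.waitForSelector()` for this. The minimal Python sketch below shows the same polling idea with no browser involved. It assumes a hypothetical `get_items` callable that stands in for a query against the rendered page.

```python
import time
from typing import Callable, List


def wait_for_items(
    get_items: Callable[[], List[str]],
    timeout: float = 10.0,
    poll_interval: float = 0.5,
) -> List[str]:
    """Poll get_items() until it returns a non-empty list or the timeout expires.

    get_items is hypothetical: in a real scraper it would query the rendered
    DOM for the elements that the AJAX call inserts.
    """
    deadline = time.monotonic() + timeout
    while True:
        items = get_items()
        if items:
            return items
        if time.monotonic() >= deadline:
            raise TimeoutError("AJAX content did not appear before the timeout")
        time.sleep(poll_interval)


if __name__ == "__main__":
    # Simulate a page whose AJAX content shows up on the third poll.
    polls = {"count": 0}

    def fake_query() -> List[str]:
        polls["count"] += 1
        return ["item A", "item B"] if polls["count"] >= 3 else []

    print(wait_for_items(fake_query, timeout=5, poll_interval=0.01))
```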

2. What techniques would you use to deal with CAPTCHAs and other anti-scraping measures?

Understanding CAPTCHAs

- Analyze the type of CAPTCHA (e.g., text-based, image-based)

- Identify the purpose and mechanism behind the CAPTCHA

Countermeasures

- Use specialized tools or services that solve CAPTCHAs

- Implement machine learning models to automate CAPTCHA recognition

- Use CAPTCHA-solving proxies to bypass CAPTCHA challenges

3. How would you design a scalable and reliable web scraping system?

- Modular Architecture: Divide the system into smaller, independent modules for easier maintenance and scaling.

- Load Balancing: Distribute scraping tasks across multiple servers to handle high traffic and avoid bottlenecks.

- Caching: Store frequently accessed data to reduce the load on the scraping infrastructure.

- Error Handling: Implement robust error handling mechanisms to ensure the system can recover from temporary failures.

- Monitoring and Analytics: Regularly monitor the system’s performance and collect data to identify areas for improvement and optimization.
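A small single-machine sketch makes the modular-architecture and error-handling points concrete:

- Fetching, parsing, and storing are separate, swappable functions. `fetch`, `parse`, and `store` are hypothetical placeholders.
- A thread pool runs them concurrently.
- Transient fetch failures are retried.
- Counters give a monitoring system something to track.

```python
import threading
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List


class TransientError(Exception):
    """Raised by a fetcher for failures worth retrying (timeouts, 5xx, ...)."""


def run_pipeline(
    urls: List[str],
    fetch: Callable[[str], str],      # hypothetical: URL -> raw HTML
    parse: Callable[[str], Dict],     # hypothetical: raw HTML -> record
    store: Callable[[Dict], None],    # hypothetical: persist one record
    max_retries: int = 2,
    workers: int = 4,
) -> Counter:
    stats: Counter = Counter()  # metrics a monitoring system could export
    lock = threading.Lock()     # Counter increments are not atomic across threads

    def bump(key: str) -> None:
        with lock:
            stats[key] += 1

    def process(url: str) -> None:
        # Fetch stage: retry transient failures up to max_retries times.
        for _ in range(max_retries + 1):
            try:
                html = fetch(url)
                break
            except TransientError:
                bump("transient_errors")
        else:
            bump("failed")  # every attempt failed; skip this URL
            return
        # Parse and store stages are independent, swappable modules.
        store(parse(html))
        bump("ok")

    with ThreadPoolExecutor(max_workers=workers) as pool:
        list(pool.map(process, urls))  # consume results so worker exceptions surface
    return stats
```

To spread the work across several servers, the in-process pool would typically give way to a shared job queue that many workers consume. The per-stage split stays the same.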

4. What are the ethical considerations to keep in mind when web scraping?

- Respect robots.txt: Follow the crawling rules a site publishes in its robots.txt file and avoid fetching the paths it disallows.

- Avoid Excessive Scraping: Limit the frequency and volume of scraping to prevent overloading website servers.

- Handle Sensitive Data Responsibly: Protect personal or confidential information gathered during scraping.

- Consider Copyright Laws: Ensure compliance with copyright laws and avoid scraping copyrighted content without permission.

- Maintain Transparency: Be open about who is scraping and why, for example by identifying your scraper in its User-Agent string. Don’t present scraped content in a way that misleads users or infringes on trademarks.
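Checking robots.txt is straightforward to automate with Python’s standard-library `urllib.robotparser`. In this sketch the robots.txt text, the URLs, and the `ExampleScraper/1.0` user agent are made up. Against a live site you would call `set_url()` and `read()` instead of `parse()`. When a site provides a `crawl_delay()` value, it also gives a concrete number for the “Avoid Excessive Scraping” point.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt content for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def make_parser(robots_txt: str) -> RobotFileParser:
    """Build a parser from robots.txt text already in hand (no network access)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    rp = make_parser(ROBOTS_TXT)
    agent = "ExampleScraper/1.0"  # hypothetical user agent
    for url in ("https://example.com/products/1", "https://example.com/private/admin"):
        print(url, "allowed" if rp.can_fetch(agent, url) else "disallowed")
    print("crawl delay (seconds):", rp.crawl_delay(agent))
```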

5. How would you handle dynamic websites that change their structure frequently?

- Regularly Monitor Website Changes: Use automated tools or manual testing to identify structural changes.

- Adapt Scraping Code: Modify the code to adjust to the new structure and extract the desired data.

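- Use Flexible Scraping Techniques: Anchor selectors on stable features (IDs, semantic attributes, visible labels) rather than long positional paths. When the primary selector fails, fall back to alternative selectors, DOM traversal, or regular expressions.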

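- Consider Using a Headless Browser: A headless browser renders JavaScript-generated content and lets you locate elements the way a user would, for example by visible text. Visible text often changes less than auto-generated class names, which reduces the impact of structural changes.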
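One way to apply flexible extraction is a fallback chain:

1. Try the pattern for the current layout first.
2. Try the alternatives in order.
3. Flag the page when nothing matches so the code can be updated.

This minimal sketch uses regular expressions to stay dependency-free, and the patterns are hypothetical. Regexes over raw HTML are brittle, so with a parser such as BeautifulSoup or lxml the same idea would apply to a list of CSS or XPath selectors.

```python
import re
from typing import List, Optional

# Hypothetical patterns for a product price, ordered from the current layout
# to older or alternative ones. Real patterns depend on the target site.
PRICE_PATTERNS: List[re.Pattern] = [
    re.compile(r'<span[^>]*class="[^"]*\bprice\b[^"]*"[^>]*>\s*([^<]+?)\s*</span>'),
    re.compile(r'<meta[^>]*itemprop="price"[^>]*content="([^"]+)"'),
    re.compile(r'data-price="([^"]+)"'),
]


def extract_first(html: str, patterns: List[re.Pattern]) -> Optional[str]:
    """Return the captured value from the first pattern that matches, else None."""
    for pattern in patterns:
        match = pattern.search(html)
        if match:
            return match.group(1)
    # No pattern matched: the layout has probably changed, so the caller
    # should log or alert rather than silently store an empty value.
    return None
```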

6. What are the advantages and disadvantages of using cloud-based web scraping services?

Advantages

- Scalability: Cloud services provide elastic resources to handle varying scraping demands.

- Reliability: Cloud platforms offer high uptime and redundancy, ensuring scraping continuity.

- Cost-effectiveness: Pay-as-you-go pricing can cost less than maintaining in-house servers, especially for intermittent or variable workloads.

- Expertise: Providers of managed scraping services often have teams specializing in web scraping who offer technical support and optimization guidance.

Disadvantages

- Cost: Cloud services can be more expensive than self-hosted solutions for large-scale or long-term scraping.

- Data Privacy Concerns: Cloud providers may have access to scraped data, raising security and privacy issues.

- Limited Customization: Cloud services may not offer the same level of customization as in-house solutions.

7. How would you approach scraping data from a website that uses infinite scrolling?

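- Detect Infinite Scrolling: Inspect the page’s network traffic (for example, in the browser’s developer tools) to see how new content is loaded. Typically a scroll event triggers an AJAX request to a paginated endpoint.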

- Simulate User Actions: Replicate the actions of a user scrolling through the page, triggering the loading of additional content.

- Use Automation Tools: Employ headless browsers or web scraping frameworks that support simulating user interactions and handling dynamic content.

- Monitor Page Changes: Track changes in the page’s DOM or network requests to determine when new data is loaded.
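Once the page’s network requests show how new items arrive, the scroll loop can reduce to requesting one batch after another. The sketch below assumes a hypothetical `load_batch(cursor)` function that returns a list of items plus a cursor for the next batch. That function could call the site’s paginated endpoint or scroll a headless browser. The loop:

- stops when the cursor runs out or a batch brings nothing new;
- deduplicates on an assumed `id` field;
- caps the number of batches so a truly endless feed cannot run forever.

```python
from typing import Callable, Dict, List, Optional, Tuple

# A batch is (items, next_cursor); next_cursor is None when the feed is exhausted.
Batch = Tuple[List[Dict], Optional[str]]


def scrape_infinite_feed(
    load_batch: Callable[[Optional[str]], Batch],  # hypothetical loader
    max_batches: int = 100,
) -> List[Dict]:
    """Request batch after batch, as scrolling would, until the feed ends."""
    seen_ids = set()
    items: List[Dict] = []
    cursor: Optional[str] = None
    for _ in range(max_batches):  # hard cap guards against endless feeds
        batch, cursor = load_batch(cursor)
        new = [item for item in batch if item["id"] not in seen_ids]
        if not new:
            break  # nothing new loaded: treat this as the end of the feed
        for item in new:
            seen_ids.add(item["id"])
            items.append(item)
        if cursor is None:
            break
    return items
```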

8. What strategies would you use to optimize the performance of your web scraping code?

- Minimize HTTP Requests: Fetch only the pages and resources that contain the data you need. Where the site supports it, request larger pages or batches instead of many small requests.

- Use Parallel Processing: Distribute scraping tasks across multiple threads or processes to improve efficiency.

- Optimize Selector Queries: Use efficient CSS or XPath selectors to locate elements and avoid unnecessary DOM traversal.

- Utilize Caching: Store frequently accessed data in memory or on disk to reduce the need for repeated scraping.

- Monitor and Profile Code: Regularly monitor the performance of your code and identify areas for optimization.
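Parallel processing and caching combine naturally. In this sketch `fetch_cached` is a hypothetical placeholder for a real HTTP request:

- Duplicate URLs are dropped before dispatch.
- `functools.lru_cache` answers repeat URLs from memory across calls.
- A thread pool overlaps network waits.

Note that `lru_cache` has no expiry, so pages that change would need a time-limited cache. Also keep `workers` within what the target site tolerates.

```python
from concurrent.futures import ThreadPoolExecutor
from functools import lru_cache
from typing import Dict, List

FETCHED: List[str] = []  # records real "network" calls so the caching is visible


@lru_cache(maxsize=1024)
def fetch_cached(url: str) -> str:
    """Hypothetical fetcher; a real one would perform an HTTP GET here."""
    FETCHED.append(url)
    return f"<html>{url}</html>"


def fetch_all(urls: List[str], workers: int = 8) -> Dict[str, str]:
    unique = list(dict.fromkeys(urls))  # drop duplicate URLs, keep order
    # Scraping is I/O-bound, so threads overlap the time spent waiting on responses.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return dict(zip(unique, pool.map(fetch_cached, unique)))


if __name__ == "__main__":
    fetch_all(["https://example.com/a", "https://example.com/b", "https://example.com/a"])
    fetch_all(["https://example.com/a"])  # served from the cache
    print(FETCHED)  # each URL appears once
```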

9. How would you handle the situation when a website implements rate limiting measures?

- Detect Rate Limits: Watch responses for signs of throttling, such as HTTP 429 (Too Many Requests) status codes, Retry-After headers, or a sudden rise in errors or blocked pages.

- Respect Rate Limits: Adhere to the specified rate limits to avoid triggering blocks or penalties.

- Implement Backoff Mechanisms: After a rate-limited response, wait before retrying and increase the wait exponentially on repeated failures (for example 1 s, 2 s, 4 s). Honor any Retry-After value the server sends.

- Use Proxies or IP Rotation: Utilize proxies or rotate IP addresses to bypass rate limiting based on a single IP.
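The backoff step can be sketched as follows. The `(status, retry_after, body)` tuple and the `do_request` callable are assumptions standing in for a real HTTP client. A real client would also need to parse the `Retry-After` header, which may be either a number of seconds or an HTTP date. Random jitter keeps many clients from retrying in lockstep. The `sleep` function is injectable so the logic can be tested without actually waiting.

```python
import random
import time
from typing import Callable, Optional, Tuple

# Simplified response model (an assumption, not a real client's API):
# (status_code, retry_after_seconds_or_None, body)
Response = Tuple[int, Optional[float], str]


def fetch_with_backoff(
    do_request: Callable[[], Response],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Retry on HTTP 429 with exponential backoff, honoring Retry-After when given.

    For any other status the body is returned; handling it is left to the caller.
    """
    for attempt in range(max_attempts):
        status, retry_after, body = do_request()
        if status != 429:
            return body
        if attempt == max_attempts - 1:
            break  # no point sleeping after the final attempt
        if retry_after is not None:
            delay = retry_after  # the server said how long to wait
        else:
            # 1, 2, 4, ... times base_delay plus random jitter, capped at max_delay
            delay = min(max_delay, base_delay * 2 ** attempt + random.uniform(0, base_delay))
        sleep(delay)
    raise RuntimeError(f"still rate-limited after {max_attempts} attempts")
```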

10. What are the best practices for maintaining a web scraping system over time?

- Regular Monitoring: Continuously monitor the system’s performance and data quality to ensure it meets requirements.

- Website Changes Detection: Regularly check for website updates or structural changes that may impact scraping.

- Adapt to Changes: Modify the scraping code or strategies to accommodate website changes and maintain data accuracy.

- Maintain Documentation: Keep thorough documentation of the scraping process, including the tools and techniques used, to facilitate future maintenance and troubleshooting.

- Security Considerations: Implement necessary security measures to protect the system from unauthorized access or data breaches.
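For the monitoring point, note that a layout change often doesn’t raise an error at all. The scraper keeps running but starts returning empty fields. A minimal data-quality check, assuming records are dictionaries with hypothetical field names, works in two steps:

1. Compute how often each expected field is filled in a run.
2. Raise an alert when that rate falls below a threshold (the 90% default is an arbitrary example).

```python
from typing import Dict, List


def field_fill_rates(records: List[Dict], fields: List[str]) -> Dict[str, float]:
    """Fraction of records in which each field is present and non-empty."""
    if not records:
        return {f: 0.0 for f in fields}
    return {
        f: sum(1 for r in records if r.get(f) not in (None, "")) / len(records)
        for f in fields
    }


def quality_alerts(records: List[Dict], fields: List[str], min_rate: float = 0.9) -> List[str]:
    """Describe every field whose fill rate dropped below min_rate."""
    rates = field_fill_rates(records, fields)
    return [f"{f}: only {rate:.0%} filled" for f, rate in rates.items() if rate < min_rate]
```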

Interviewers often ask about specific skills and experiences. With ResumeGemini’s customizable templates, you can tailor your resume to showcase the skills most relevant to the position, making a powerful first impression. Also check out the Resume Template specially tailored for Scrape Gatherers.

Career Expert Tips:

- Ace those interviews! Prepare effectively by reviewing the Top 50 Most Common Interview Questions on ResumeGemini.

- Navigate your job search with confidence! Explore a wide range of Career Tips on ResumeGemini. Learn about common challenges and recommendations to overcome them.

- Craft the perfect resume! Master the Art of Resume Writing with ResumeGemini’s guide. Showcase your unique qualifications and achievements effectively.

- Great Savings With New Year Deals and Discounts! In 2025, boost your job search and build your dream resume with ResumeGemini’s ATS optimized templates.

Researching the company and tailoring your answers is essential. Once you have a clear understanding of the Scrape Gatherer role’s requirements, you can use ResumeGemini to adjust your resume to match the job description.

Key Job Responsibilities

Scrape Gatherers are responsible for collecting data from a variety of online sources using web scraping techniques. They work closely with data analysts and other stakeholders to ensure that the data collected meets the desired requirements.

1. Data Collection

Scrape Gatherers use web scraping tools and techniques to collect data from websites and other online sources. They must be able to understand the structure of websites and the underlying code in order to extract data efficiently.

- Use web scraping tools to extract data from websites and other online sources

- Understand the structure of websites and the underlying code

- Develop and maintain web scraping scripts
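At its simplest, a scraping script walks a page’s HTML structure and pulls out the elements of interest. This sketch uses only Python’s standard-library `html.parser` to collect each link’s URL and visible text. The sample HTML is made up. Production scripts more often rely on libraries such as BeautifulSoup, lxml, or Scrapy.

```python
from html.parser import HTMLParser
from typing import List, Optional, Tuple


class LinkCollector(HTMLParser):
    """Collect (href, visible text) pairs for every <a> tag that has an href."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[Tuple[str, str]] = []
        self._href: Optional[str] = None
        self._text: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")  # None if the tag has no href
            self._text = []

    def handle_data(self, data):
        if self._href is not None:  # only keep text inside a link
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            # Join the text pieces and collapse runs of whitespace.
            self.links.append((self._href, " ".join("".join(self._text).split())))
            self._href = None


if __name__ == "__main__":
    page = '<ul><li><a href="/p/1">Blue <b>Widget</b></a></li><li><a href="/p/2">Red Widget</a></li></ul>'
    collector = LinkCollector()
    collector.feed(page)
    collector.close()
    print(collector.links)  # [('/p/1', 'Blue Widget'), ('/p/2', 'Red Widget')]
```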

2. Data Cleaning

Once data has been collected, Scrape Gatherers must clean and prepare it for analysis. This involves removing duplicate data, correcting errors, and converting data into a usable format.

- Clean and prepare data for analysis

- Remove duplicate data

- Correct errors

- Convert data into a usable format
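The cleaning steps above might look like this in practice. This is a minimal sketch that assumes scraped records are dictionaries with hypothetical `title`, `url`, and `price` fields, and that prices use a period as the decimal separator. It does three things:

- normalizes whitespace;
- drops empty rows and duplicates (same title ignoring case, same URL);
- converts price strings to `Decimal` values, which avoid floating-point rounding for currency.

```python
import re
from decimal import Decimal, InvalidOperation
from typing import Dict, List, Optional


def parse_price(raw: Optional[str]) -> Optional[Decimal]:
    """Turn strings like '$1,299.00' into Decimal('1299.00'); None if unparseable.

    Assumes '.' is the decimal separator; formats like '1.299,00' would need other rules.
    """
    cleaned = re.sub(r"[^\d.]", "", raw or "")  # keep only digits and periods
    if not cleaned:
        return None
    try:
        return Decimal(cleaned)
    except InvalidOperation:  # e.g. '1.2.3'
        return None


def clean_records(raw_records: List[Dict[str, str]]) -> List[Dict]:
    seen = set()
    cleaned = []
    for rec in raw_records:
        title = " ".join((rec.get("title") or "").split())  # collapse whitespace
        key = (title.lower(), rec.get("url"))
        if not title or key in seen:
            continue  # drop empty rows and duplicates
        seen.add(key)
        cleaned.append({"title": title, "url": rec.get("url"), "price": parse_price(rec.get("price"))})
    return cleaned
```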

3. Data Validation

Scrape Gatherers must validate the accuracy and completeness of the data they collect. This involves verifying data against other sources and checking for errors or inconsistencies.

- Validate the accuracy and completeness of data

- Verify data against other sources

- Check for errors or inconsistencies
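Here is a minimal validation sketch, assuming records with hypothetical `title`, `url`, and `price` fields whose prices are already `Decimal` values. `validate_record` reports missing fields, implausible URLs, and out-of-range prices; the bounds are arbitrary examples. `matches_reference` verifies the number of scraped items, within a tolerance, against a count reported elsewhere, such as a “120 results” label on the site.

```python
from decimal import Decimal
from typing import Dict, List

REQUIRED_FIELDS = ("title", "url", "price")
PRICE_RANGE = (Decimal("0"), Decimal("100000"))  # arbitrary example bounds


def validate_record(rec: Dict) -> List[str]:
    """Return the problems found in one record; an empty list means it passed."""
    problems = [f"missing {f}" for f in REQUIRED_FIELDS if rec.get(f) in (None, "")]
    url = rec.get("url")
    if url and not url.startswith(("/", "http://", "https://")):
        problems.append(f"suspicious url {url!r}")
    price = rec.get("price")
    low, high = PRICE_RANGE
    if isinstance(price, Decimal) and not (low < price < high):
        problems.append(f"price out of range: {price}")
    return problems


def matches_reference(scraped_count: int, reported_count: int, tolerance: float = 0.02) -> bool:
    """Check the scraped item count against a count reported by another source."""
    if reported_count == 0:
        return scraped_count == 0
    return abs(scraped_count - reported_count) / reported_count <= tolerance
```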

4. Data Management

Scrape Gatherers are responsible for managing the data they collect. This involves organizing data, storing it securely, and providing access to authorized users.

- Organize and store data securely

- Provide access to authorized users

- Maintain data integrity
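Here is a minimal storage sketch using Python’s built-in `sqlite3`, assuming hypothetical `url`, `title`, and `price` fields:

- A primary key on the URL keeps one row per product.
- `NOT NULL` constraints reject incomplete rows.
- An upsert (`INSERT ... ON CONFLICT ... DO UPDATE`, which needs SQLite 3.24 or newer) refreshes existing rows inside a single transaction, so a failed batch leaves the table unchanged.

Access control and encryption are outside this sketch. They would normally come from the database server or storage layer.

```python
import sqlite3
from typing import Dict, Iterable

SCHEMA = """
CREATE TABLE IF NOT EXISTS products (
    url        TEXT PRIMARY KEY,     -- one row per product page
    title      TEXT NOT NULL,
    price      TEXT,                 -- text keeps exact decimal values
    scraped_at TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP
)
"""

UPSERT = """
INSERT INTO products (url, title, price) VALUES (:url, :title, :price)
ON CONFLICT(url) DO UPDATE SET
    title = excluded.title,
    price = excluded.price,
    scraped_at = CURRENT_TIMESTAMP
"""


def save_records(conn: sqlite3.Connection, records: Iterable[Dict]) -> None:
    """Insert new products and update existing ones, all in one transaction."""
    rows = (
        {
            "url": r["url"],
            "title": r["title"],
            "price": None if r.get("price") is None else str(r["price"]),
        }
        for r in records
    )
    with conn:  # commits if every row succeeds, rolls back the whole batch otherwise
        conn.executemany(UPSERT, rows)
```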

Interview Tips

Preparing for an interview for a Scrape Gatherer position can be daunting, but with the right preparation, you can increase your chances of success.

1. Research the company and the position

Before the interview, take some time to research the company and the position you are applying for. This will help you understand the company’s culture, goals, and the specific responsibilities of the role.

- Visit the company’s website

- Read the job description carefully

- Look for news articles and other information about the company

2. Practice your answers to common interview questions

There are a number of common interview questions that you are likely to be asked, such as “Tell me about yourself” and “Why are you interested in this position?” Practice answering these questions in advance so that you can deliver your responses confidently and clearly.

- Use the STAR method (Situation, Task, Action, Result) to answer behavioral questions

- Quantify your accomplishments whenever possible

- Be prepared to talk about your experience with web scraping tools and techniques

3. Be prepared to ask questions

Asking questions at the end of the interview shows that you are interested in the position and the company. It also gives you an opportunity to learn more about the role and the company culture.

- Ask about the company’s goals and objectives

- Ask about the specific responsibilities of the role

- Ask about the company’s culture and values

4. Dress professionally and arrive on time

First impressions matter, so dress professionally and arrive on time for your interview. This shows that you respect the interviewer’s time and that you are serious about the position.

- Wear a suit or business casual attire

- Arrive on time for your interview

- Be polite and respectful to everyone you meet

Next Step:

Now that you’re armed with interview-winning answers and a deeper understanding of the Scrape Gatherer role, it’s time to take action! Does your resume accurately reflect your skills and experience for this position? If not, head over to ResumeGemini. Here, you’ll find all the tools and tips to craft a resume that gets noticed. Don’t let a weak resume hold you back from landing your dream job. Polish your resume, hit the “Build Your Resume” button, and watch your career take off! Remember, preparation is key, and ResumeGemini is your partner in interview success.