Feeling lost in a sea of interview questions? Landed that dream interview for Scrapper but worried you might not have the answers? You’re not alone! This blog is your guide for interview success. We’ll break down the most common Scrapper interview questions, providing insightful answers and tips to leave a lasting impression. Plus, we’ll delve into the key responsibilities of this exciting role, so you can walk into your interview feeling confident and prepared.

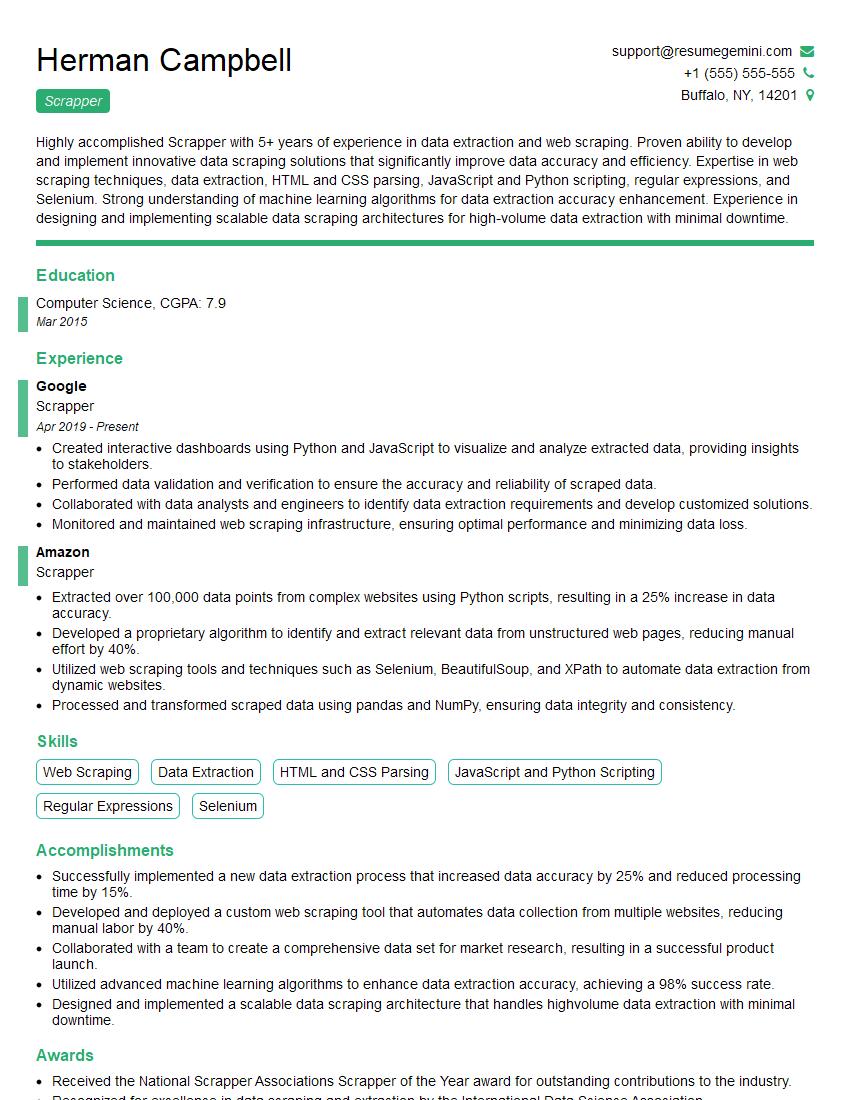

Essential Interview Questions For Scrapper

1. What are the different types of web scraping techniques?

There are several web scraping techniques. Common ones include:

- HTTP GET requests: Sending an HTTP GET request to retrieve the HTML content of a web page.

- DOM parsing: Using libraries like BeautifulSoup or lxml to parse the HTML content and extract the desired data.

- Browser automation: Using a tool like Selenium to drive a real or headless browser, interact with the page, and extract data that is rendered dynamically.

- Web scraping APIs: Utilizing specialized APIs provided by companies like Zyte (formerly Scrapinghub) or Bright Data to extract data from web pages.

- Screen scraping: Taking screenshots of web pages and using OCR (Optical Character Recognition) to extract text content.
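
The first two techniques usually work together: fetch the HTML, then parse it. Below is a minimal sketch using only Python’s standard library, with `html.parser` standing in for BeautifulSoup or lxml so that it has no third-party dependencies. The sample markup, the `product-title` class, and the `TitleExtractor` and `extract_titles` names are assumptions made for illustration.

```python
from html.parser import HTMLParser

# Hypothetical markup standing in for the body of an HTTP GET response.
# A real scraper would obtain this string from the network instead.
SAMPLE_HTML = """
<html><body>
  <h2 class="product-title">Blue Kettle</h2>
  <h2 class="product-title">Red Toaster</h2>
  <h2 class="other">Not a product</h2>
</body></html>
"""


class TitleExtractor(HTMLParser):
    """Collects the text of every <h2 class="product-title"> element."""

    def __init__(self):
        super().__init__()
        self.titles = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs.
        if tag == "h2" and dict(attrs).get("class") == "product-title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "h2":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title and data.strip():
            self.titles.append(data.strip())


def extract_titles(html):
    parser = TitleExtractor()
    parser.feed(html)
    return parser.titles


print(extract_titles(SAMPLE_HTML))  # ['Blue Kettle', 'Red Toaster']
```

In an interview, it helps to point out that the parsing step stays the same whether the HTML came from a plain GET request or from a browser-rendered page.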

2. What are the challenges associated with web scraping and how do you overcome them?

Handling dynamic content

- Use browser automation tools like Selenium or Puppeteer to execute JavaScript and retrieve dynamic content.

- Run the browser in headless mode (for example, headless Chrome or Firefox) to render dynamic content without a visible window. Older headless browsers such as PhantomJS and SlimerJS are no longer actively maintained.

Dealing with CAPTCHAs and anti-scraping measures

- Use CAPTCHA-solving services or libraries to bypass CAPTCHAs.

- Rotate IP addresses or use residential proxies to avoid being blocked by anti-scraping mechanisms.

Extracting data from complex or paginated websites

- Analyze the website’s structure and pagination patterns to identify the data extraction logic.

- Use recursion or loops to navigate through paginated pages and extract data from each page.
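
The loop-based approach to pagination can be sketched as follows. This is an illustration under stated assumptions: `FAKE_SITE` is a hypothetical in-memory stand-in for a website, and `fetch_page` stands in for a real HTTP request plus parsing step.

```python
# Hypothetical in-memory "site": each page has items and an optional next-page link.
FAKE_SITE = {
    "/products?page=1": {"items": ["a", "b"], "next": "/products?page=2"},
    "/products?page=2": {"items": ["c"], "next": "/products?page=3"},
    "/products?page=3": {"items": ["d", "e"], "next": None},
}


def fetch_page(url):
    """Stand-in for a real request; returns the parsed page."""
    return FAKE_SITE[url]


def scrape_all(start_url, max_pages=100):
    """Follow 'next' links until none remain, a page repeats, or max_pages is hit."""
    items = []
    seen = set()
    url = start_url
    while url is not None and url not in seen and len(seen) < max_pages:
        seen.add(url)
        page = fetch_page(url)
        items.extend(page["items"])
        url = page["next"]
    return items


print(scrape_all("/products?page=1"))  # ['a', 'b', 'c', 'd', 'e']
```

The `seen` set and the `max_pages` cap guard against sites whose "next" links loop back on themselves, a detail worth mentioning when asked about paginated sources.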

3. What are the ethical considerations when web scraping?

Ethical considerations when web scraping include:

- Respecting robots.txt: Following the rules in the website’s robots.txt file, which states which paths automated clients may and may not access.

- Avoiding excessive scraping: Scraping data responsibly and not overwhelming websites with excessive requests.

- Using data responsibly: Ensuring that scraped data is used for legitimate purposes and not for illegal or unethical activities.

- Citing sources: Acknowledging the source of the scraped data and giving credit to the website owners.
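
A robots.txt check can be automated with Python’s standard `urllib.robotparser` module. In this sketch the robots.txt content, the `example-scraper` user agent, and the `is_allowed` helper are assumptions for illustration; a real scraper would load the live file with `set_url()` and `read()` instead of parsing a string.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content used in place of a live file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

USER_AGENT = "example-scraper"  # assumed bot name

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_allowed(url):
    """Return True if the parsed rules permit USER_AGENT to fetch the URL."""
    return parser.can_fetch(USER_AGENT, url)


print(is_allowed("https://example.com/products"))   # True
print(is_allowed("https://example.com/private/x"))  # False
print(parser.crawl_delay(USER_AGENT))               # 2
```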

4. Have you worked on any web scraping projects? Can you share your experience?

Yes, I have worked on several web scraping projects, including:

- E-commerce data extraction: Scraping product information, prices, and reviews from e-commerce websites to help businesses monitor competitors and conduct market research.

- Social media data analysis: Scraping social media platforms to collect data on user demographics, engagement, and sentiment for social media marketing campaigns.

- News aggregation: Scraping news articles from multiple sources to create a comprehensive news feed for a personalized news app.

5. What are the different tools and libraries you are proficient in for web scraping?

I am proficient in using a variety of tools and libraries for web scraping, including:

- Python libraries: BeautifulSoup, lxml, Selenium, Requests

- Node.js libraries: Cheerio, Puppeteer, Axios

- Web scraping APIs: Zyte (formerly Scrapinghub), Bright Data

- Headless browsers: Headless Chrome and Firefox, plus PhantomJS and SlimerJS in legacy projects

- Cloud platforms: AWS, Azure, Google Cloud for deploying and scaling scraping pipelines

6. How do you handle web scraping errors and ensure data quality?

To handle web scraping errors and ensure data quality, I employ the following strategies:

- Error handling: Implement error handling mechanisms to catch and handle exceptions that may occur during scraping.

- Data validation: Validate the scraped data to ensure it meets the expected format and criteria.

- Data cleaning: Perform data cleaning techniques such as removing duplicates, correcting data types, and handling missing values.

- Regular maintenance: Regularly monitor and maintain the scraping scripts to ensure they continue to function as intended.
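
One common error-handling mechanism is retrying failed requests with exponential backoff. The sketch below is a minimal version, not a definitive implementation: `fetch_with_retries` and `make_flaky_fetch` are hypothetical names, and the flaky fetcher simulates network failures so the example runs without a network.

```python
import time


def fetch_with_retries(fetch, url, max_attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url); after a failure, wait base_delay * 2**attempt and try again."""
    last_error = None
    for attempt in range(max_attempts):
        try:
            return fetch(url)
        except (ConnectionError, TimeoutError) as exc:
            last_error = exc
            # Only sleep if another attempt is coming.
            if attempt < max_attempts - 1:
                sleep(base_delay * 2 ** attempt)
    raise RuntimeError(f"giving up on {url} after {max_attempts} attempts") from last_error


def make_flaky_fetch(failures):
    """Hypothetical fetcher that fails `failures` times, then succeeds."""
    state = {"calls": 0}

    def fetch(url):
        state["calls"] += 1
        if state["calls"] <= failures:
            raise ConnectionError("simulated network error")
        return f"<html>content of {url}</html>"

    return fetch


# Record the delays instead of actually sleeping, to keep the demo fast.
delays = []
html = fetch_with_retries(make_flaky_fetch(2), "https://example.com", sleep=delays.append)
print(html)    # <html>content of https://example.com</html>
print(delays)  # [1.0, 2.0]
```

Passing `sleep` as a parameter is a design choice that makes the retry logic easy to test; production code would simply use the default `time.sleep`.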

7. What are the best practices for web scraping?

Best practices for web scraping include:

- Respect robots.txt: Follow the rules in the website’s robots.txt file and stay out of the paths it disallows.

- Avoid excessive scraping: Scraping data responsibly and not overwhelming websites with excessive requests.

- Use headless browsers: Run headless Chrome or Firefox through a tool like Selenium or Puppeteer to render JavaScript-heavy pages the way a regular browser would. Headless mode does not by itself reduce the risk of detection.

- Rotate IP addresses: Use residential proxies or IP rotation services to avoid being blocked due to suspicious activity.

- Handle CAPTCHAs: Throttle requests so that CAPTCHAs are triggered less often, and use a CAPTCHA-solving service when one still appears. A headless browser alone does not solve CAPTCHAs.
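
Avoiding excessive requests usually comes down to enforcing a minimum delay between them. Here is a minimal sketch of such a throttle; the `Throttle` class and its parameters are hypothetical names chosen for illustration, and the interval of 0.1 seconds is only a demo value.

```python
import time


class Throttle:
    """Enforces a minimum interval between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous request, if any

    def wait(self):
        """Block until at least min_interval seconds have passed since the last call."""
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now


throttle = Throttle(min_interval=0.1)
for url in ["https://example.com/a", "https://example.com/b"]:
    throttle.wait()
    # A real scraper would issue the request for `url` here.
    print("fetching", url)
```

A sensible interval depends on the target site; when robots.txt declares a crawl delay, that value is a reasonable starting point.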

8. What are the limitations of web scraping?

Limitations of web scraping include:

- Dynamic content: Websites with dynamic content may require advanced techniques like Selenium or Puppeteer to extract data.

- CAPTCHA and anti-scraping measures: Some websites implement CAPTCHAs or anti-scraping mechanisms to prevent automated scraping.

- Data quality: The quality of scraped data can vary depending on the website’s structure and the scraping techniques used.

- Legal and ethical considerations: Web scraping must be done responsibly and in compliance with the website’s terms of service and ethical guidelines.

9. What are the advantages of using a web scraping API?

Advantages of using a web scraping API include:

- Ease of use: Web scraping APIs provide a simple and convenient way to access scraped data without the need for complex programming.

- Scalability: The provider manages proxies, browsers, and retries, so large-scale scraping tasks can grow without extra infrastructure on your side.

- Reliability: APIs are often maintained by experienced professionals, ensuring reliability and consistency in data extraction.

- Compliance: APIs can help ensure compliance with website terms of service and ethical guidelines by adhering to best practices.

10. How do you keep up with the latest trends and developments in web scraping?

I stay up-to-date with the latest trends and developments in web scraping through the following methods:

- Online forums and communities: Participating in online forums and communities like Stack Overflow and Reddit.

- Technical blogs and articles: Reading technical blogs and articles from industry experts and thought leaders.

- Conferences and webinars: Attending industry conferences and webinars to learn about new techniques and best practices.

- Open source projects: Contributing to and learning from open source web scraping projects on platforms like GitHub.

Researching the company and tailoring your answers is essential. Once you have a clear understanding of the Scrapper role’s requirements, you can use ResumeGemini to adjust your resume to match the job description.

Key Job Responsibilities

Scrappers are responsible for gathering and extracting data from various sources, such as websites, documents, and databases. Their work is crucial for various industries, including market research, financial analysis, and data mining.

1. Data Extraction

Scrappers use specialized tools and techniques to extract data from websites and other sources. They identify the relevant data, such as product prices, customer reviews, or financial statements, and extract it in a structured format.
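
To make "structured format" concrete, here is a small sketch that turns raw scraped strings into typed records and writes them as CSV. The `Product` dataclass, the `parse_price` and `to_csv` helpers, and the sample rows are all assumptions for illustration; `parse_price` handles only the two currency symbols shown.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class Product:
    name: str
    price: float
    currency: str


def parse_price(text):
    """Turn a raw string such as '$1,299.00' into (1299.0, 'USD')."""
    symbols = {"$": "USD", "€": "EUR"}  # only these two symbols are handled
    text = text.strip()
    currency = symbols[text[0]]
    amount = float(text[1:].replace(",", ""))
    return amount, currency


def to_csv(products):
    """Serialize Product records to a CSV string with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer,
        fieldnames=[f.name for f in fields(Product)],
        lineterminator="\n",
    )
    writer.writeheader()
    for product in products:
        writer.writerow(asdict(product))
    return buffer.getvalue()


# Hypothetical raw values as they might come out of a parsed page.
raw_rows = [("Blue Kettle", "$24.99"), ("Red Toaster", "€1,049.50")]
products = [Product(name, *parse_price(price)) for name, price in raw_rows]
print(to_csv(products))
```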

2. Data Cleaning and Processing

Once the data is extracted, scrappers clean and process it to remove duplicates, errors, and inconsistencies. They ensure that the data is accurate, complete, and organized in a way that makes it easy to analyze and interpret.
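
The cleaning steps described above (handling missing values, correcting data types, removing duplicates) can be sketched in a few lines. The `clean_records` function, the field names, and the sample records are hypothetical; real pipelines often use a library such as pandas for the same job.

```python
def clean_records(records, required=("name", "price")):
    """Drop rows missing required fields, coerce price to float, remove duplicates."""
    cleaned = []
    seen = set()
    for record in records:
        # Missing values: skip rows where a required field is absent or empty.
        if any(record.get(key) in (None, "") for key in required):
            continue
        # Data types: skip rows whose price cannot be read as a number.
        try:
            price = float(record["price"])
        except (TypeError, ValueError):
            continue
        name = record["name"].strip()
        # Duplicates: compare on a normalized name plus the numeric price.
        key = (name.lower(), price)
        if key in seen:
            continue
        seen.add(key)
        cleaned.append({"name": name, "price": price})
    return cleaned


raw = [
    {"name": "Blue Kettle", "price": "24.99"},
    {"name": "blue kettle ", "price": 24.99},  # duplicate after normalization
    {"name": "Red Toaster", "price": "n/a"},   # invalid price
    {"name": "", "price": "5"},                # missing name
    {"name": "Green Mug", "price": "7"},
]
print(clean_records(raw))
# [{'name': 'Blue Kettle', 'price': 24.99}, {'name': 'Green Mug', 'price': 7.0}]
```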

3. Data Analysis

Scrappers may also be involved in analyzing the extracted data to identify trends, patterns, and insights. They use their technical skills and knowledge of data analysis techniques to extract valuable information from the data.

4. Report Generation

Scrappers often create reports and presentations based on the data they have extracted and analyzed. These reports provide insights and recommendations that can help businesses make informed decisions.

Interview Tips

Preparing for an interview for a Scrapper role requires a combination of technical skills and soft skills. Here are some tips to help you ace your interview:

1. Highlight Your Technical Skills

Be prepared to discuss your experience with data extraction tools and techniques. Explain how you approach data cleaning and processing tasks, and demonstrate your understanding of data analysis concepts.

2. Showcase Your Project Experience

If you have worked on any personal or academic projects involving data scraping, be sure to highlight them in your interview. Provide specific examples of the challenges you faced and the solutions you implemented.

3. Practice Your Soft Skills

Scrappers often work in teams and collaborate with other departments. Be prepared to demonstrate your communication, teamwork, and problem-solving skills. Explain how you handle deadlines and work under pressure.

4. Research the Company

Take the time to research the company you are interviewing with. Understand their business, industry, and the role of data scraping within their organization. This will help you tailor your answers to the specific needs of the position.

Next Step:

Now that you’re armed with the knowledge of Scrapper interview questions and responsibilities, it’s time to take the next step. Build or refine your resume to highlight your skills and experiences that align with this role. Don’t be afraid to tailor your resume to each specific job application. Finally, start applying for Scrapper positions with confidence. Remember, preparation is key, and with the right approach, you’ll be well on your way to landing your dream job.